Computational Linux Cluster

BIAC's computational cluster has 32 blades with a total of 720 Intel Xeon processor cores and 5 TB of memory. The cluster runs RHEL 9 and uses Son of Grid Engine for job scheduling. The compute nodes (login nodes excluded) are diskless. Their /root and /home directories are NFS-mounted from the BIAC storage server and managed with oneSIS. Every node runs the same disk image, and per-node differences are handled through NFS mounts and RAM-disk elements.
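Son of Grid Engine jobs are normally submitted from a login node with `qsub`. The sketch below assembles, but does not run, a `qsub` command line. It uses the standard options `-N` (job name), `-cwd` (run in the current directory), `-j y` (merge stdout and stderr), `-pe` (request slots from a parallel environment) and `-l` (resource request). The parallel-environment name `smp`, the script `analyze.sh`, the job name and the `h_vmem` value are hypothetical. The names and limits actually configured on the BIAC cluster are site-specific and may differ.

```python
import shlex
from typing import List, Optional


def build_qsub_command(
    script: str,
    job_name: str,
    slots: int = 1,
    pe_name: str = "smp",  # hypothetical parallel-environment name; site-specific
    h_vmem: Optional[str] = None,  # e.g. "4G"; only meaningful if the site configures h_vmem
) -> List[str]:
    """Build (but do not execute) a Son of Grid Engine qsub command line."""
    if slots < 1:
        raise ValueError("slots must be >= 1")
    # -N names the job, -cwd runs it in the submission directory,
    # -j y merges stderr into stdout.
    cmd = ["qsub", "-N", job_name, "-cwd", "-j", "y"]
    if slots > 1:
        # Request multiple slots through a parallel environment.
        cmd += ["-pe", pe_name, str(slots)]
    if h_vmem is not None:
        # Hard virtual-memory limit; how it is enforced depends on site configuration.
        cmd += ["-l", f"h_vmem={h_vmem}"]
    cmd.append(script)
    return cmd


if __name__ == "__main__":
    # Hypothetical job: a 4-slot run of analyze.sh.
    cmd = build_qsub_command("analyze.sh", "fmri_prep", slots=4, h_vmem="4G")
    print(shlex.join(cmd))
    # To submit for real on a login node: subprocess.run(cmd, check=True)
```

Returning the argument list instead of calling `qsub` directly keeps the sketch testable off-cluster. The same list can also be passed to `subprocess.run` without shell quoting issues.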

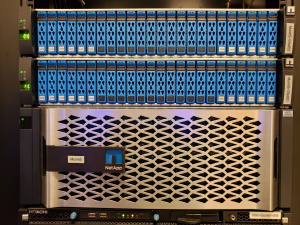

All-Flash Data Storage Server

BIAC has a NetApp AFF-A800 storage server that provides 200 TB of enterprise-class storage. The AFF-A800 is configured as a dual-node, high-availability cluster with all-flash storage, which gives it high performance and reliability. BIAC's storage servers (Munin and Munin2) are connected to the network via four 10-Gigabit Ethernet connections. Munin provides centralized data storage for the BIAC cluster and the rest of the BIAC network.

High-Speed Network

BIAC's network consists of two data servers and approximately 100 Windows and Linux workstations connected by Cisco Gigabit Ethernet switches. It connects directly to the 10-Gigabit Duke Medical Center network backbone through a full-duplex Gigabit Ethernet link to a Cisco data center switch. The Medical Center backbone in turn connects to the University network (DukeNet) backbone, also through a Cisco data center switch. DukeNet is connected to the North Carolina Networking Initiative's (NCNI) ultra-high-performance fiber-optic ring network, which links Duke to MCNC at OC-48 speed (2.5 Gbit/s). MCNC/NCNI provides connections to the Internet, Internet2 (Abilene), and vBNS+.
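The link speeds quoted in this section and the storage section can be made concrete as ideal transfer times. The sketch below assumes a hypothetical 1 TB (10^12 byte) dataset and full line rate with no protocol overhead, so real transfers will be slower. It also treats the four 10-GbE storage connections as a 40 Gbit/s theoretical aggregate, which a single transfer stream would not normally reach.

```python
# Link rates in gigabits per second, from the figures quoted in this document.
# The 40 Gbit/s entry is a theoretical aggregate of four 10-GbE links (assumption).
LINK_RATES_GBPS = {
    "Gigabit Ethernet uplink": 1.0,
    "OC-48 (NCNI ring)": 2.5,
    "four 10-GbE storage links (aggregate)": 40.0,
}


def ideal_transfer_seconds(size_bytes: float, rate_gbps: float) -> float:
    """Lower-bound transfer time at full line rate, ignoring protocol overhead."""
    if rate_gbps <= 0:
        raise ValueError("rate_gbps must be positive")
    bits = size_bytes * 8  # bytes -> bits
    return bits / (rate_gbps * 1e9)  # Gbit/s -> bit/s


if __name__ == "__main__":
    one_tb = 1e12  # hypothetical dataset size, decimal terabyte
    for name, rate in LINK_RATES_GBPS.items():
        secs = ideal_transfer_seconds(one_tb, rate)
        print(f"{name}: {secs:,.0f} s ({secs / 3600:.2f} h)")
```

These figures are lower bounds for comparison only. Actual throughput also depends on protocol overhead, disk or flash speed at each end, and competing traffic.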

Data Analysis Laboratories

BIAC has two data analysis laboratories, one in Duke Hospital South and one in Duke Hospital North, that are available to collaborating investigators and students.

BIAC Data Security Overview